When a paper known to be generated by AI is not flagged, it is referred to as a false negative. While many assume this indicates a failure of the software, the reality is more complex. False negatives are often an intentional byproduct of the way AI detectors are engineered. To keep wrongful accusations rare and protect students, Turnitin prioritizes the reduction of false positives, even if it means some AI content slips through the cracks.

What Are False Negatives in AI Detection?

In the context of AI writing detection, a false negative occurs when a piece of text was actually generated or significantly assisted by an AI tool, but the detector fails to identify it, instead labeling it as human-written.

To understand why this happens, we must recognize that AI detection is probabilistic rather than definitive. Traditional plagiarism detection looks for matching text in a database of sources, so a match can be checked directly against the original. AI detection has no source to compare against; instead, it evaluates writing patterns, sentence structure, and predictability.

False Positives vs. False Negatives

- False Positive: The detector flags human-written text as AI. This is the most “dangerous” error in academia, as it can lead to wrongful accusations of misconduct.

- False Negative: The detector fails to flag AI-written text. While this is a limitation of the tool, it does not carry the same immediate risk of harming an innocent student’s reputation.

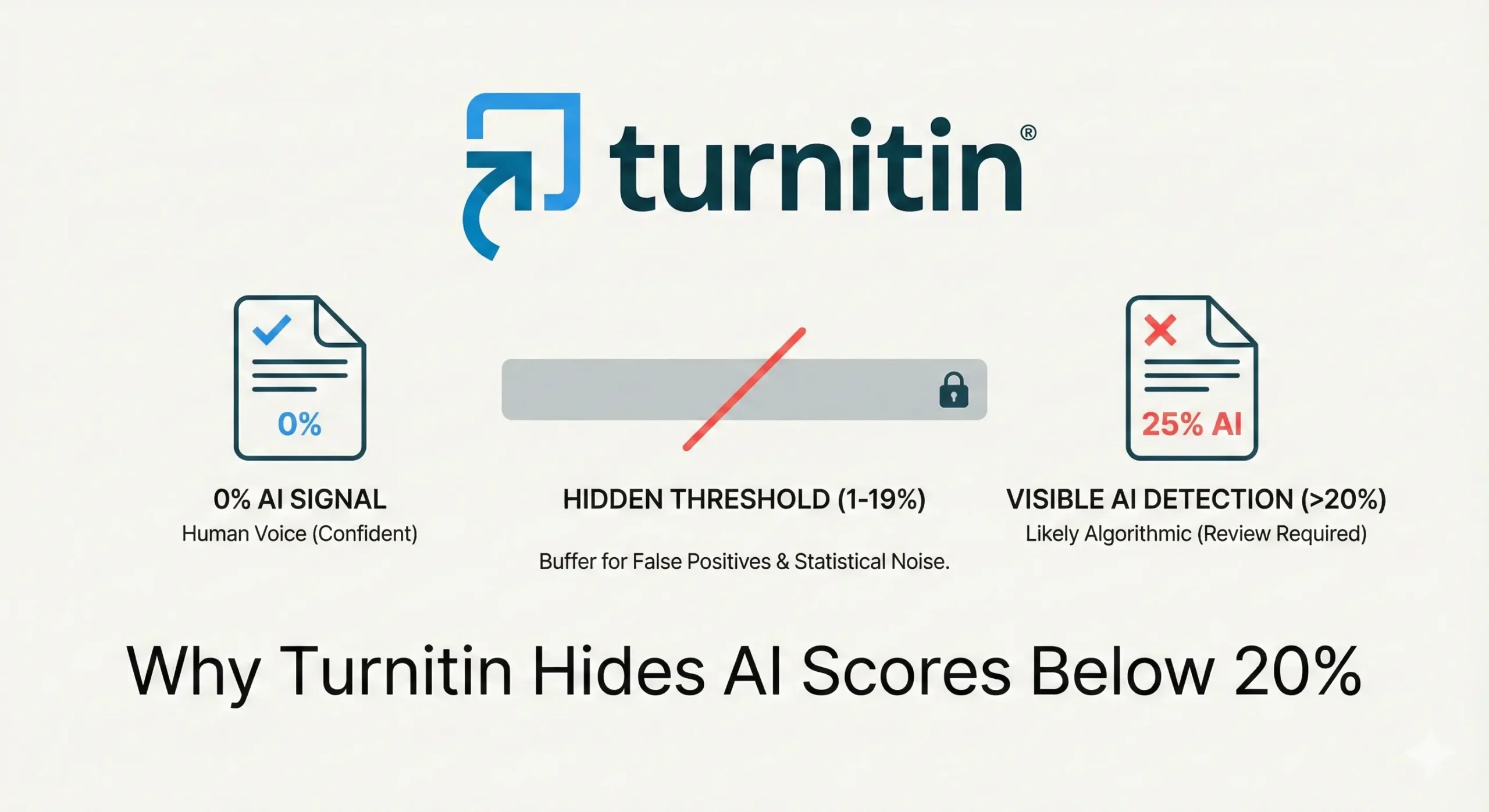

Because of the high stakes involved in academic integrity, Turnitin’s model is tuned to be “conservative.” It requires a high degree of statistical certainty before it applies an AI label. Consequently, “not detected” does not necessarily mean “human-written”; it simply means the AI signatures were not strong enough to meet the threshold for a confident flag.
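The following is a minimal sketch of this thresholding idea, not Turnitin's actual implementation. The `label` function, the 0.9 threshold, and the sample scores are all hypothetical, chosen only to show how a high bar turns moderately suspicious text into "not detected."

```python
def label(ai_probability: float, threshold: float = 0.9) -> str:
    """Flag text only when the score clears a deliberately high bar.

    Both the score scale and the default threshold are assumptions
    made for illustration; they are not Turnitin's real values.
    """
    if not 0.0 <= ai_probability <= 1.0:
        raise ValueError("ai_probability must be between 0 and 1")
    return "flagged" if ai_probability >= threshold else "not detected"


# Hypothetical detector scores for three essays.
scores = {"essay_a": 0.97, "essay_b": 0.72, "essay_c": 0.10}
labels = {name: label(score) for name, score in scores.items()}

for name, result in labels.items():
    print(name, scores[name], result)
```

In this sketch, `essay_b` scores 0.72, which is more likely AI than not, yet it receives the same "not detected" label as `essay_c`. That is the sense in which "not detected" and "human-written" are different claims.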

Note: For more on the opposite side of this issue, read: False Positives in AI Detection: Why Human Writing Sometimes Gets Flagged as AI.

How Humanized or Edited AI Text Evades Detection

The “cat and mouse” game between AI writers and AI detectors is primarily played in the realm of editing and humanization. Raw output from an LLM usually carries distinct markers: high predictability, repetitive sentence structures, and a lack of “burstiness” (variation in sentence length and complexity).
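To make "burstiness" concrete, here is a rough sketch that measures variation in sentence length as the standard deviation divided by the mean. This is only one simple way to quantify the idea, and it is an assumption for illustration; commercial detectors do not publish their exact features, and the function names are hypothetical.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! or ? and count the words in each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (0.0 means uniform)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew up."
varied = "Stop. The dog ran off across the wide field before anyone could react. Why?"

print(burstiness(uniform))
print(burstiness(varied))
```

The uniform sample, with three sentences of identical length, scores zero, while the varied sample scores well above it. Editing that mixes short and long sentences raises this kind of measure.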

However, these markers are easily diluted through several methods:

- Paraphrasing Tools: Tools like Quillbot or specialized “AI humanizers” rewrite AI text to alter its statistical signature.

- Manual Editing: When a student takes an AI-generated draft and manually changes words, adjusts the tone, or adds personal anecdotes, the “AI signal” becomes fragmented.

- Hybrid Writing: A workflow where a human writes the thesis and outline, and the AI fills in the gaps, often produces a document that blends human and machine patterns so thoroughly that detection becomes nearly impossible.

Further reading links: How to Write Human-Like Text That Passes AI Detection and Can Turnitin Detect AI Humanizing Tools Content?

Short Text, Long Text, and Detection Gaps

The length and structure of a document strongly affect how accurate Turnitin's AI detection can be. AI detection thrives on data; the more text there is, the more patterns the detector can analyze to form a confident conclusion.

- Short Passages: If a student uses AI to generate only a single paragraph or a few sentences within a much larger human-written work, the detector may not have enough data points to trigger a flag. This is why fragmented AI use is significantly harder to detect than a fully generated essay.

- List-Heavy or Technical Content: AI detectors struggle with highly structured text, such as lists, bibliographies, or dense technical specifications. Because these forms of writing are naturally less “creative” and more predictable, they can mimic the statistical patterns of AI, leading Turnitin to ignore them to avoid false positives.
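The effect of short AI passages inside a longer human-written document can be sketched with a toy model. Everything here is an assumption for illustration: the per-segment scores, the 0.9 segment threshold, the 20% reporting floor, and the function names are hypothetical and do not describe Turnitin's internal pipeline.

```python
def document_ai_share(segment_scores: list[float], segment_threshold: float = 0.9) -> float:
    """Fraction of segments whose hypothetical score clears the threshold."""
    if not segment_scores:
        return 0.0
    flagged = sum(1 for score in segment_scores if score >= segment_threshold)
    return flagged / len(segment_scores)


def report(segment_scores: list[float], reporting_floor: float = 0.2) -> str:
    """Report a percentage only when enough of the document is flagged."""
    share = document_ai_share(segment_scores)
    return f"{share:.0%} AI" if share >= reporting_floor else "not reported"


# Nine human-like segments plus one strongly AI-like paragraph.
mostly_human = [0.1] * 9 + [0.95]
# Every segment looks AI-generated.
fully_generated = [0.95] * 10

print(report(mostly_human))
print(report(fully_generated))
```

In this toy model the single AI-like paragraph is detected at the segment level, but it makes up only 10% of the document, so nothing is reported. A fully generated essay clears the floor easily.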

Why Turnitin Accepts Some False Negatives by Design

It may seem counterintuitive for a detection company to allow “leaks” in its own system. However, in the world of academic technology, precision (avoiding false flags) is far more important than recall (catching every instance).

The Ethical and Legal Risk

If Turnitin were to become more “aggressive” in its detection, it would inevitably flag more human-written papers as AI. For a student, a false accusation of AI use can lead to:

- Failed assignments or courses.

- Disciplinary hearings.

- Long-term damage to academic reputation.

By prioritizing a lower false positive rate (which Turnitin claims is under 1% for documents with more than 20% AI), Turnitin must accept a higher rate of false negatives. In other words, the design reflects the view that it is better for ten AI-written papers to go undetected than for one human-written paper to be wrongly accused.
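This trade-off can be shown with a small worked example. The scores below are invented toy data, and `error_rates` is a hypothetical helper; the point is only that raising the threshold lowers the false positive rate while raising the false negative rate.

```python
def error_rates(human_scores: list[float], ai_scores: list[float], threshold: float) -> tuple[float, float]:
    """Return (false positive rate, false negative rate) at a threshold."""
    # Human-written papers scoring at or above the threshold are wrongly flagged.
    false_positives = sum(score >= threshold for score in human_scores)
    # AI-written papers scoring below the threshold go undetected.
    false_negatives = sum(score < threshold for score in ai_scores)
    return false_positives / len(human_scores), false_negatives / len(ai_scores)


# Invented detector scores for five human-written and five AI-written papers.
human_scores = [0.05, 0.10, 0.20, 0.35, 0.60]
ai_scores = [0.55, 0.70, 0.85, 0.92, 0.98]

aggressive = error_rates(human_scores, ai_scores, threshold=0.5)
conservative = error_rates(human_scores, ai_scores, threshold=0.9)

print("aggressive:", aggressive)
print("conservative:", conservative)
```

With the aggressive threshold, one of five human papers is wrongly flagged and no AI paper is missed. With the conservative threshold, no human paper is flagged, but three of five AI papers slip through.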

What Students and Educators Should Understand About Undetected AI

For students, the “success” of a false negative—passing an AI check with a 0% score despite using AI—is often a hollow victory. Academic integrity is about the acquisition of knowledge and the development of critical thinking skills.

Why Transparency Matters

Instead of focusing on how to “beat” the detector, the conversation should shift toward transparency. Many universities are now allowing AI for brainstorming or research, provided it is properly cited.

Furthermore, relying on the fact that Turnitin might miss AI content is a high-risk strategy. AI detection technology is constantly being updated. A paper that passes today might be re-scanned in the future with a more advanced model, potentially leading to retroactive disciplinary action.

Final Thoughts — False Negatives Are a Feature, Not a Bug

In the final analysis, false negatives in Turnitin AI reports are an inherent part of the current technological landscape. They represent the “safe side” of a difficult statistical balance. Turnitin’s design acknowledges that human writing is diverse and that protecting the innocent is more important than catching every rule-breaker.

As AI models become more sophisticated, the gap between human and machine writing will continue to shrink. This makes human judgment more important than ever. Whether you are an educator or a student, remember that a Turnitin report is a tool for starting a conversation, not a final verdict.

Frequently Asked Questions (FAQs)

What is a false negative in Turnitin AI detection?

A false negative occurs when the software analyzes AI-generated text but fails to identify it as such, returning a low or 0% AI score. Essentially, the machine-generated patterns are present, but the detector classifies the text as human-written.

Why does Turnitin miss some AI content?

Turnitin misses AI content primarily because it prioritizes avoiding false positives. If the AI “signal” is weak, often because of manual editing, humanization tools, or a passage that is too short, the system will intentionally refrain from flagging it to avoid a wrongful accusation of misconduct.

Does a 0% AI score mean the text was written by a human?

Not necessarily. A 0% score simply means the system did not find enough statistical evidence to confidently label the text as AI. While this often indicates human authorship, it can also be a “false negative” where AI was used but evaded detection through paraphrasing or editing.

Are false positives worse than false negatives?

In an academic setting, yes. A false positive can result in a student being unfairly punished for work they actually wrote. A false negative, while a limitation of the tool’s effectiveness, does not carry the same risk of life-altering administrative injustice or legal liability for the institution.

Should a Turnitin AI score be used as the only evidence of misconduct?

No. Expert consensus, including official guidance from Turnitin, suggests that AI detectors should only be used as one data point. They must be combined with an educator’s knowledge of a student’s typical writing style, oral defenses, and a review of the student’s drafting process.
